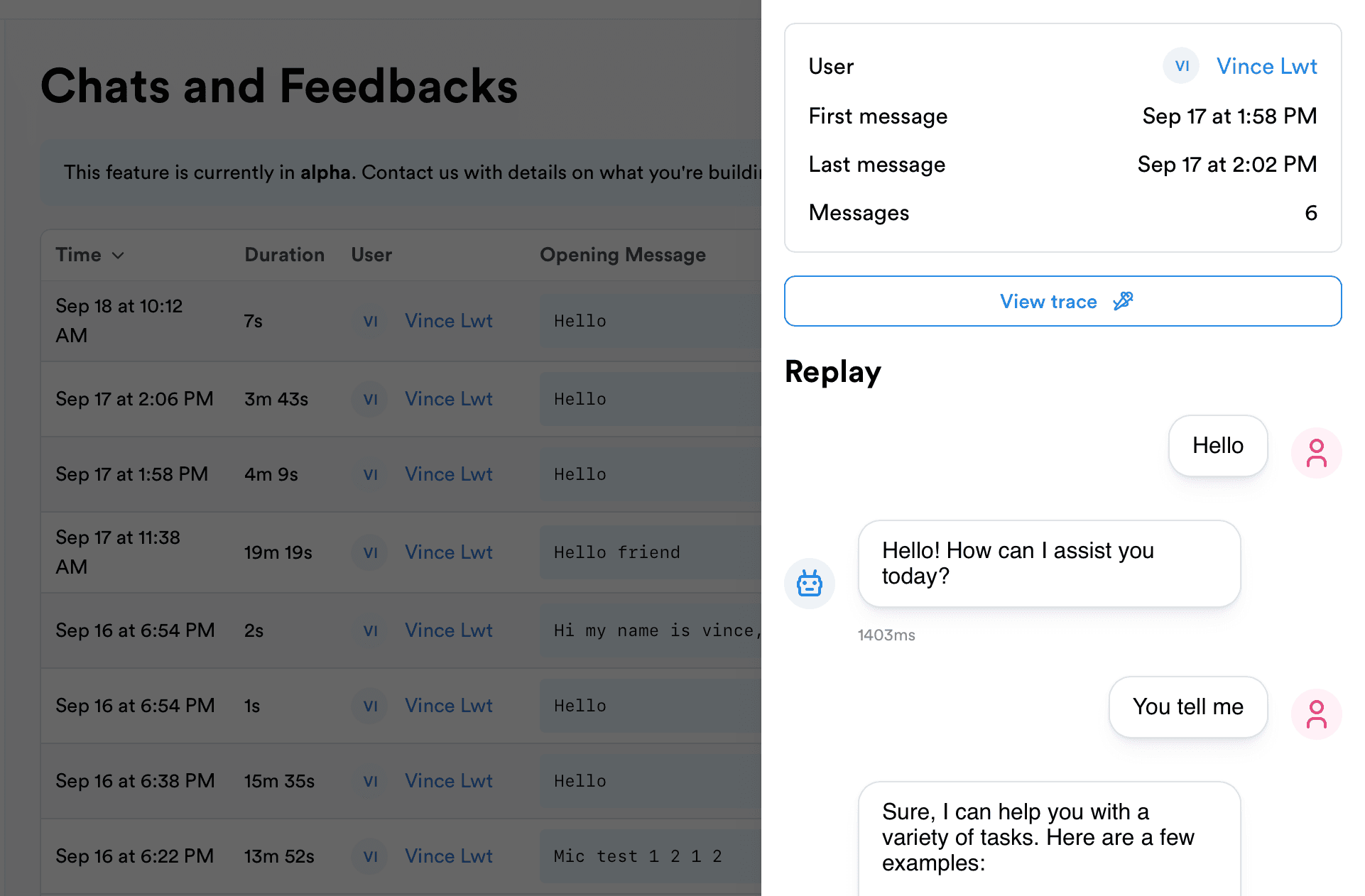

Chats & Threads

Record and replay chat conversations in your chatbot app to understand where your chatbot falls short and how to improve it.

Chats integrate seamlessly with traces by reconciling messages with LLM calls and agents.

You can record chats on the backend, or directly on the frontend if that's easier for you.

Set up the SDK

Open a thread

Start by opening a thread.

To resume an existing thread, pass that thread's ID.

You can also add tags to a thread by passing an object with a tags parameter:
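The three ways of opening a thread described above (a new thread, resuming by ID, and adding tags) can be modeled with a toy, in-memory sketch. This is not the Lunary SDK: `Thread`, `open_thread`, and the `tags` keyword are hypothetical names chosen for illustration, and tags are simply stored as a list of strings.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Thread:
    """Hypothetical in-memory thread (not the Lunary SDK)."""
    id: str
    tags: list[str] = field(default_factory=list)


# Hypothetical registry of known threads, keyed by thread ID
_threads: dict[str, Thread] = {}


def open_thread(thread_id: Optional[str] = None, tags: Optional[list[str]] = None) -> Thread:
    """Open a new thread, or resume an existing one when its ID is passed."""
    if thread_id is not None and thread_id in _threads:
        thread = _threads[thread_id]  # resume: same thread, same ID
    else:
        thread = Thread(id=thread_id or str(uuid.uuid4()))
        _threads[thread.id] = thread
    # Add any new tags, skipping ones the thread already has
    for tag in tags or []:
        if tag not in thread.tags:
            thread.tags.append(tag)
    return thread


new = open_thread(tags=["support"])
resumed = open_thread(new.id)
print(resumed is new, resumed.tags)  # True ['support']
```

Keying threads by ID is what makes resuming possible: any process that knows the ID gets back the same conversation.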

Track messages

Now you can track messages. The supported roles are assistant, user, system, and tool.
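As a rough illustration of message tracking, here is a toy, in-memory sketch that accepts only the four roles listed above and returns an ID for each recorded message. It is not the Lunary SDK: `track_message` is a hypothetical snake_case stand-in for trackMessage, and the message dictionary shape is an assumption.

```python
import uuid
from dataclasses import dataclass, field

# The roles listed in the docs above
SUPPORTED_ROLES = {"assistant", "user", "system", "tool"}


@dataclass
class Thread:
    """Hypothetical in-memory thread (not the Lunary SDK)."""
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    messages: list[dict] = field(default_factory=list)

    def track_message(self, role: str, content: str) -> str:
        """Record a message and return its ID (reused later for feedback)."""
        if role not in SUPPORTED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        message_id = str(uuid.uuid4())
        self.messages.append({"id": message_id, "role": role, "content": content})
        return message_id


thread = Thread()
user_msg_id = thread.track_message("user", "Where is my order?")
bot_msg_id = thread.track_message("assistant", "It ships tomorrow.")
print([m["role"] for m in thread.messages])  # ['user', 'assistant']
```

Returning the ID from every tracked message is what lets later steps, such as feedback, point back at a specific reply.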

Capture user feedback

Finally, you can track user feedback on bot replies:

The message ID to pass is the one returned by trackMessage.

To remove feedback, pass null as the feedback data.
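The feedback rules above (feedback keyed by the message ID, and null to remove it) fit in a few lines. This is a hypothetical in-memory sketch, not the Lunary SDK: `track_feedback`, the `feedback_store` dictionary, and the `{"thumb": "up"}` payload shape are assumptions, and Python's `None` plays the role of `null`.

```python
from typing import Optional

# Hypothetical store: message ID -> feedback payload
feedback_store: dict[str, dict] = {}


def track_feedback(message_id: str, feedback: Optional[dict]) -> None:
    """Attach feedback to a message; passing None removes it."""
    if feedback is None:
        feedback_store.pop(message_id, None)  # no error if nothing was stored
    else:
        feedback_store[message_id] = feedback  # replaces any earlier feedback


track_feedback("msg-123", {"thumb": "up"})
print(feedback_store)  # {'msg-123': {'thumb': 'up'}}
track_feedback("msg-123", None)
print(feedback_store)  # {}
```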

Reconcile with LLM calls & agents

To take full advantage of Lunary's tracing capabilities, you can reconcile your LLM and agent runs with the messages.

We will automatically reconcile messages with runs.

If you're using LangChain or agents behind your chatbot, you can inject the current message ID into the context as the parent:

Note that it's safe to pass the message ID from your frontend to your backend, for example if you're tracking chats directly on the frontend.
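A minimal sketch of that hand-off, assuming the frontend tracks the message and sends its ID in the request body: the backend reads the ID and attaches its own run to it as the parent. The `messageId` field, `handle_chat_request`, and the `runs` log are hypothetical; a real backend would use its web framework's request parsing instead of `json.loads` on a raw string.

```python
import json

runs: list[dict] = []  # hypothetical run log


def handle_chat_request(body: str) -> dict:
    """Backend handler: the frontend already tracked the message and sent its ID."""
    payload = json.loads(body)
    message_id = payload["messageId"]  # hypothetical field name
    # Attach this backend run to the frontend-tracked message as its parent
    run = {"parent": message_id, "prompt": payload["content"]}
    runs.append(run)
    return run


request_body = json.dumps({"messageId": "msg-123", "content": "Where is my order?"})
handle_chat_request(request_body)
print(runs[0]["parent"])  # msg-123
```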